AI summarization: An applied scientist’s POV

Senior Applied Scientist

Whether it’s writing meeting minutes or condensing a 30-minute customer call into two or three sentences of notes in your CRM, one of the most time-consuming things that businesses have to do is summarize large chunks of text data into a shorter version.

AI summarization offers a solution to this problem by automatically generating concise and informative summaries of long-form text documents. This technology can be applied to a wide range of content, including research papers, legal documents, and call transcriptions, and can help users quickly understand the content without having to read the entire document.

Already, there are many AI tools that say they can summarize calls, reports, and other content. But developing effective summarization algorithms isn’t easy: it means overcoming several technical and linguistic challenges. In this blog post, we’ll explore just why AI summarization is so difficult, and why it’s worth doing.

Dialogue summarization and its use cases

In this blog post, we’ll look specifically at AI summarization for dialogues. You could also have an AI summarize things like a research report, a long article, or a podcast, but for most businesses, the most important thing they’ll need to summarize is dialogue.

Why? Because dialogue includes one of our most valuable sources of insight: Our everyday customer and team conversations.

So, let’s start by defining AI summarization in the context of dialogue.

Dialogue summarization is the process of extracting the most important information from a conversation and presenting it in a concise and coherent manner.

As you can imagine, this has numerous use cases, especially in contact centers for customer support or sales.

One of the most important use cases for summarization is calls and meetings, especially longer ones. Participants need to summarize their takeaways, take note of their action items, and share information quickly before moving on to the next thing.

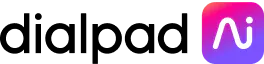

For example, Dialpad’s Ai Recap feature provides generative call summaries (leveraging ChatGPT) to make your team meetings and customer conversations more productive, accessible, and actionable.

Whether you’re in sales, customer support, or any other part of the business, having access to a summarization tool (like Ai Recap) is huge.

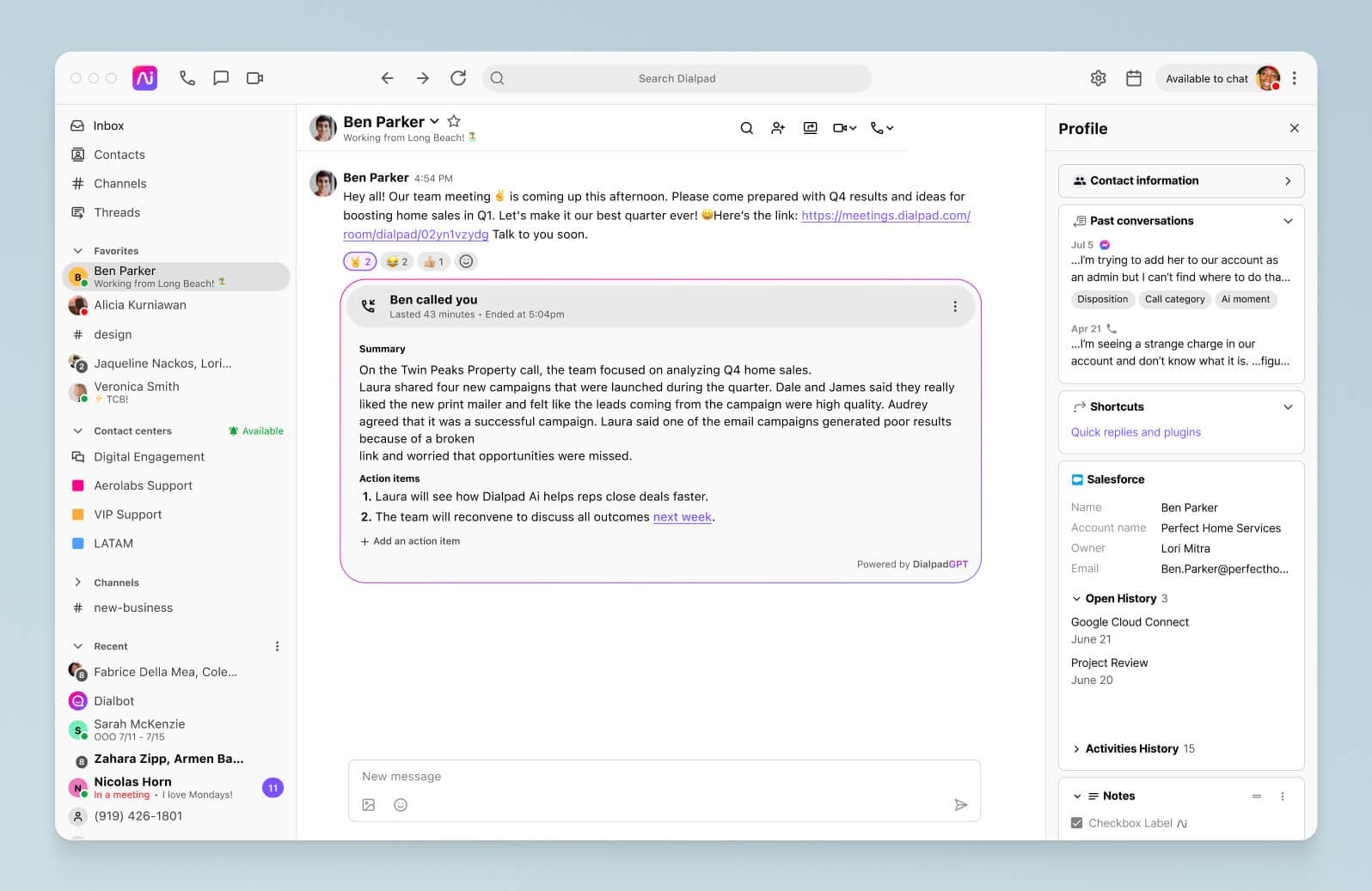

For example, customer support reps deal with a high volume of calls daily. Having Ai Recap summarize key information allows them to wrap up calls quickly and easily so that they can move on to the next customer on the line.

Similarly, sales reps may use summarization to help automate note-taking during the call, capturing things like the prospect’s interests and pain points, so they can know exactly what to focus on in their follow-up touches.

At the same time, AI-generated summaries can help contact center managers understand the topic of any call at a glance, and help them decide how to coach agents. Are a lot of customers calling to cancel your service? Are you seeing more pricing conversations?

Summarization makes it easier to uncover this information without having to read an entire transcript or listen to the whole call recording.

Challenges in dialogues generated by ASR systems

Unlike written text, spoken dialogues between humans pose several unique challenges that make summarization difficult:

Speech overlaps: Occur when multiple speakers talk at the same time, making it difficult to identify who said what.

False starts: Situations where speakers begin a sentence but stop midway or start with incorrect words.

Repetitions: Happen when speakers repeat the same words or phrases.

Disfluency: Situations where speakers stutter or pause frequently, making the dialogue hard to follow.

All of this can lead to mistranscription, where the ASR system incorrectly transcribes words and introduces inaccurate information into the transcript.

At Dialpad, we have in-house automatic speech recognition (ASR) systems that produce conversation transcripts in real time, and although we have one of the most accurate transcription engines out there, we still see mistranscription happen from time to time. That speaks to the many irregularities, slang, and unique speech patterns that humans have!

Common metrics for evaluating summary quality

In the realm of AI, it's vital to accurately gauge the performance of machine-generated output. When it comes to assessing the quality of an AI-produced summary, we can rely on a few important metrics:

Factuality: Zooms in on the accuracy of the information encapsulated in the summary. A high-quality summary must be factually correct to be of any real value.

Informativeness: Measures the amount of information the summary contains and how effectively it conveys the essence of the original dialogue. A great summary should hit all the crucial points without losing the core message.

Consistency: Evaluates the coherence and logic of the summary. It's crucial to ensure the summary is free from contradictions or irrelevant information, maintaining a solid narrative throughout.

Fluency: Fluency is all about readability and grammatical correctness. A top-notch summary should be easy to read, smoothly flowing from one sentence to the next.

When it comes to measuring fluency, ROUGE scores are our go-to metric. ROUGE scores are calculated by counting the words and word sequences (n-grams) that the AI-generated summary shares with a reference text, such as a human-written summary. The higher the ROUGE score, the more similar the generated summary is to the reference.
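To make the ROUGE idea concrete, here is a minimal sketch of ROUGE-N computed by hand. It is a simplification for illustration, not the scorer we use in production: the whitespace tokenizer and the two example sentences are assumptions, and real toolkits typically add stemming and punctuation handling.

```python
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Simplifying assumption: lowercase and split on whitespace only.
    return text.lower().split()


def rouge_n(candidate: str, reference: str, n: int = 1) -> dict[str, float]:
    """Precision, recall, and F1 over n-grams shared by candidate and reference."""

    def ngrams(tokens: list[str]) -> Counter:
        return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

    cand = ngrams(tokenize(candidate))
    ref = ngrams(tokenize(reference))
    # Counter intersection keeps the minimum count per n-gram (clipped matches).
    overlap = sum((cand & ref).values())
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical example sentences, not real call data.
reference = "the customer called to cancel their subscription"
generated = "the customer wants to cancel the subscription"
scores = rouge_n(generated, reference, n=1)
```

Here five of the seven generated words also appear in the reference, so precision and recall both come out to 5/7. Because ROUGE-1 ignores word order, variants such as ROUGE-2 and ROUGE-L are usually reported alongside it.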

However, factuality is a bit more elusive. For example, a summarization algorithm may identify dialogue utterances containing a factual statement, but without understanding the context, it could miss important details or produce a summary that simply isn’t accurate.

Similarly, some factual information might be implicit or inferred from the original dialogue, and an algorithm might not be able to identify and accurately represent this information in a summary.

Let’s illustrate this with an example:

Say you come across a news recording about a new COVID-19 vaccine. You use an AI summarization tool to quickly get the key points. The summary you receive is:

"New COVID-19 vaccine has a 100% success rate."

However, later you find out that the AI summarization tool didn’t include important details from the original source, like the fact that the vaccine's success rate was based on a small sample size of only 100 participants and that further testing was needed to confirm its effectiveness.

This example shows how a summarization algorithm can create factual inaccuracies by omitting important details from the original text, which can lead to incorrect interpretations and potentially harmful actions.
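A small sketch shows why this kind of error is hard to catch automatically. The check below flags any number in the summary that never appears in the source, which is one simple way to look for invented facts. The function names and the source text are hypothetical, written only to mirror the vaccine example above.

```python
import re


def numbers_in(text: str) -> set[str]:
    # Capture integers, decimals, and percentages such as "100", "3.5", "100%".
    return set(re.findall(r"\d+(?:\.\d+)?%?", text))


def ungrounded_numbers(summary: str, source: str) -> set[str]:
    """Numbers that appear in the summary but nowhere in the source."""
    return numbers_in(summary) - numbers_in(source)


# Hypothetical source text paraphrasing the example above.
source = (
    "In a trial with only 100 participants, the new COVID-19 vaccine had a "
    "100% success rate. Further testing is needed to confirm its effectiveness."
)
summary = "New COVID-19 vaccine has a 100% success rate."

flagged = ungrounded_numbers(summary, source)
invented = ungrounded_numbers("The vaccine has a 95% success rate.", source)
```

The check would catch an invented figure like "95%", but `flagged` is empty for the summary above: every number it mentions is in the source. The summary passes even though it drops the sample size and the need for further testing, so errors of omission need more than surface-level matching.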

Long story short: Researchers are still on the hunt for the perfect metric to measure factual consistency effectively.

As we continue to refine our evaluation methods, we can expect even more precise measurements of AI-generated summaries, pushing the boundaries of what AI can achieve in the field of summarization.

Overcoming the challenges of AI summarization

Okay, so we covered some of the biggest challenges that can impact the quality of dialogue summarization. Now, we’ll discuss potential solutions and strategies to overcome them.

Tackling ASR-generated dialogue issues

To mitigate the consequences of using ASR-generated dialogues, we can invest in refining ASR technology and algorithms, improving their ability to handle overlaps, false starts, and repetitions.

Furthermore, advanced post-processing techniques can be employed to rectify mistranscriptions and disfluencies in the transcripts.
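As a rough illustration of what post-processing can look like, the sketch below removes filler words and collapses immediate word repetitions in a transcript line. It is a deliberately simple rule-based example, not a description of Dialpad’s pipeline: the filler list and function names are assumptions, and it does not attempt to repair false starts or mistranscribed words.

```python
import string

# Hypothetical filler list; a real system would tune this per language and domain.
FILLERS = {"um", "uh", "er", "hmm"}


def normalize(token: str) -> str:
    # Compare tokens case-insensitively and without surrounding punctuation.
    return token.lower().strip(string.punctuation)


def clean_utterance(text: str) -> str:
    cleaned: list[str] = []
    for token in text.split():
        word = normalize(token)
        if word in FILLERS:
            continue  # drop filled pauses
        if cleaned and word and word == normalize(cleaned[-1]):
            continue  # collapse immediate repetitions ("I I want")
        cleaned.append(token)
    return " ".join(cleaned)


raw = "Um, I I want to to cancel my my subscription"
cleaned_line = clean_utterance(raw)
```

A rule like this also collapses intentional repetitions (such as "very very"), which is one reason learned models are often used for this step instead of fixed rules.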

Contextual understanding

Summarizing spoken language can be complicated due to the presence of colloquialisms and contextual dependencies. Developing AI models that can better understand context and informal language will lead to more accurate and meaningful summaries.

Continuous model improvement

As AI models are trained on larger and more diverse datasets, they can better learn the nuances of human conversations. Continuous improvement of these models, alongside regular evaluation using metrics like factuality, informativeness, consistency, and fluency, will contribute to higher-quality summaries.

Human-in-the-loop

Incorporating a human-in-the-loop system, where AI-generated summaries are reviewed and refined by human experts, can provide valuable feedback for AI model improvement. This collaboration ensures the highest quality summaries while reducing the burden on human experts.
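One way such a workflow could reduce reviewer workload is to send only low-scoring summaries to a human. The sketch below assumes each summary already has an automatic quality score between 0 and 1. The `ScoredSummary` type, the `route_for_review` function, and the 0.7 threshold are all hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScoredSummary:
    call_id: str
    text: str
    quality_score: float  # hypothetical 0-1 score from an automatic metric


def route_for_review(
    summaries: list[ScoredSummary], threshold: float = 0.7
) -> tuple[list[ScoredSummary], list[ScoredSummary]]:
    """Split summaries into auto-approved and needs-human-review lists."""
    approved = [s for s in summaries if s.quality_score >= threshold]
    needs_review = [s for s in summaries if s.quality_score < threshold]
    return approved, needs_review


# Hypothetical example data.
batch = [
    ScoredSummary("call-1", "Customer asked about pricing tiers.", 0.92),
    ScoredSummary("call-2", "Customer wants refund maybe cancel.", 0.41),
]
approved, needs_review = route_for_review(batch)
```

The corrections reviewers make to the flagged summaries can then be collected as feedback for improving the model.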

By addressing these challenges and harnessing cutting-edge advancements in dialogue summarization techniques, we can elevate summary quality to unprecedented levels. This will not only enhance the utility of dialogue summarization in various industries but also contribute to the ongoing evolution of AI-driven language understanding and processing.

How Dialpad is driving better summarization

At Dialpad, we’re working towards building better summarization systems by leveraging existing state-of-the-art large language models. At the same time, we’re building more advanced approaches to accurately measure summary quality and reduce the effort required to fix it.

💡 FUN FACT:

Dialpad has amassed almost 4 billion minutes of transcription and voice, 5 billion Ai events categorized, and 145 million Ai recommendations. Dialpad's Ai Recap incorporates this data alongside ChatGPT to bring accuracy and relevance to natural-sounding summaries.

Get on the waitlist for Ai Recap

Save your spot on the waitlist to try out Dialpad Ai Recap, or book a demo with our team to see how it works!