Making a sentiment model explainable: A deep dive into AI

Applied Scientist, AI Engineering

We spend a great deal of time in our lives unearthing the motives of others. (“Why’d she say that?”)

We make our way through the world often baffled by what other people say and do and resolve our gaps in understanding either through assumption and attribution ("I’m sure it’s because I was late yesterday.") or through inquiry, both indirect ("What on earth is her problem?") and direct ("I’m not leaving till you tell me why you said that").

In other words, we imagine why or we ask why.

As artificial intelligence becomes more ubiquitous, it’s only natural that we begin to wonder about its motives. Yes, this is partially because we are afraid that a superintelligence that will enslave us all might be evolving out of AI and want to see it coming.

But crucially, it is also because the mechanics of this new intelligence are so alien to us. We are interested in learning how it works. If it is making decisions that are consequential to our lives, it’s only natural to be interested in knowing how it arrives at these decisions.

We want to know, as we often do of humans:

Are the decisions correct - How often is the AI wrong? Are the decisions based on all the relevant information?

Are the decisions fair - Does the AI exhibit harmful societal biases so common to humans?

Are the decisions reliable - Will the AI render consistent decisions every time it encounters similar kinds of input?

Are the decisions defensible - Can the decisions be explained to us?

Are the decisions certain - How confident is the AI of a particular decision?

Understanding is a prerequisite to building trust. Using algorithmic techniques to understand the decisions of AI is called AI explainability. At Dialpad, we use explainability techniques to help our customers understand the output of the deep learning models they interact with through product features. We also use these techniques to improve our deep learning models.

In this blog post, we'll do a deep technical dive into how we built Dialpad's sentiment analysis model. If you're interested in the inner workings of AI, keep reading.

Explainability at Dialpad

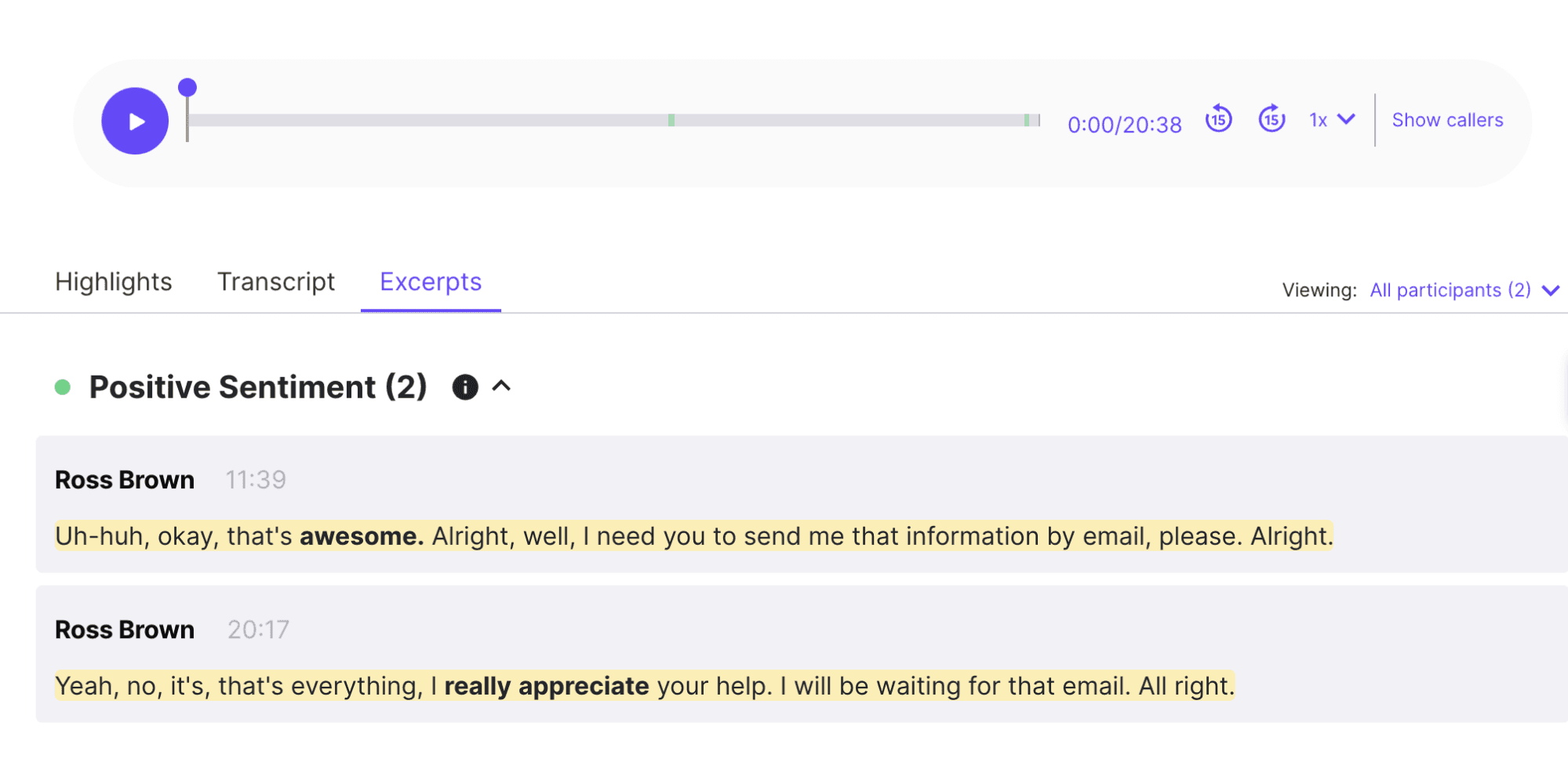

Let's take the case of the sentiment analysis model that is used in the product and in which explainability plays an important role. The sentiment analysis feature picks out parts of a call transcript that exhibit any positive or negative feeling.

Positive sentiment identifies and highlights whenever a speaker says something to indicate that they are very happy or pleased with something.

An example: "We loved the demo, it was very informative."

Negative sentiment identifies and highlights whenever a speaker says something to indicate that they are very frustrated or annoyed with something.

An example: "This isn’t working and I’m getting really frustrated."

A common user complaint about an older version of our sentiment analysis feature was that users didn’t understand why certain sentences were tagged as positive or negative sentiment.

This was especially true in cases where the sentences were very long or where the sentiment expressed was less obvious. Additionally, some sentiment judgements are subjective value judgements. For instance, how sensitive should a sentiment analysis model be to swear words?

Some people frown upon the usage of any swear words at all in a business communications setting and view them as “negative” while others evaluate their appropriateness based on context or do not mind them at all. The “correctness” of the sentiment analysis in this case depends on the perspective of the user.

Sometimes, though, the model was just plain wrong. For instance, current sentiment analysis models perform quite poorly on sarcasm: because a sarcastic remark uses “positive” words, they often mark as positive sentiment something that is obviously (to humans, anyway) said in contempt.

Many of these grey areas exist in the task of sentiment detection. In order to build trust in the sentiment analysis feature, we needed to make the deep learning model that underlies it explainable.

How to interrogate an AI

When we prod

When we prod a deep learning model to explain itself, what intricacies of a model’s functioning are we hoping to uncover? We might want to know:

Which elements of the input data were most consequential to the decision made. For instance, in a deep learning model that aids a self-driving car in perception, we might want to know which parts of a camera image most commonly help the model distinguish between such similar looking objects as a street light pole and a traffic light pole.

What intermediate tasks does a model need to learn in order to achieve its stated goals. For instance, does a model need to learn about parts of speech in order to learn how to summarize a piece of text? We might also want to know which parts of the model specialize in performing these intermediate tasks.

Why the model fails, when it does. When a voice assistant fails to understand what was said to it, what about that particular input, or the interaction between that input and the model, caused this failure?

Are there certain identifiable groups of input that the model performs poorly on? This line of inquiry is crucial to identifying if your model has learned harmful biases based on gender, race and other protected categories.
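One simple way to start on that last question is to break evaluation results down by group and compare error rates. The sketch below is illustrative only: the group names, labels, and records are made up, and `error_rate_by_group` is a hypothetical helper rather than anything from a specific library.

```python
from collections import defaultdict

def error_rate_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        if truth != predicted:
            errors[group] += 1
    # Fraction of wrong predictions within each group.
    return {group: errors[group] / totals[group] for group in totals}

# Hypothetical evaluation records, for illustration only.
records = [
    ("group_a", "positive", "positive"),
    ("group_a", "negative", "negative"),
    ("group_b", "negative", "positive"),
    ("group_b", "negative", "positive"),
    ("group_b", "negative", "negative"),
]
rates = error_rate_by_group(records)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.2f}")
```

A large gap between groups does not explain why the model fails, but it tells you where to point the explainability techniques described next.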

When they respond

There are two broad types of deep learning explanations:

Global explainability/Structural analyses: This involves studying the model’s internal structure, detecting which input patterns are captured and how these are transformed to produce the output. This is akin to getting a brain MRI or EEG which allows you to peer into a brain, see how it’s wired, study individual component parts and their response to different stimuli, take note of structural and operational faults and so on.

Local explainability/Behavioural analyses: This involves deducing how the model works through observing how it behaves for single, specific instances. This is similar to psychological experiments that involve asking participants to perform specified tasks before and after interventions in order to study the effects of said interventions.

The art of asking

There are several techniques you can use to inquire into the workings of your deep learning models. While the mechanics of these techniques are beyond the scope of this post, I will attempt to summarize the main categories of inquiry:

Explaining with Local Perturbations: In this line of inquiry, we alter, very slightly, different sections or aspects of individual inputs to see how this affects the output. If one section or aspect of the input - an identifiable group of pixels, a particular word - produces a demonstrable change in the output, one could conclude that those parts of the input are most consequential to the output. For instance, in an automated speech recognition model, a model that transcribes spoken speech to text, dropping small sections of audio one by one, from start to end, and seeing how this intervention alters the transcribed text will tell you which parts of a particular audio file are the most important to its transcription.

This category of techniques will give you local explanations. Techniques in this category include Gradients, Occlusion, and Activation maximization.
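To make the idea concrete, here is a minimal word-level occlusion sketch. It assumes a toy lexicon-based scorer (`toy_sentiment_score` and `LEXICON` are hypothetical stand-ins for a real model); the occlusion loop itself does not depend on how the score is produced.

```python
# Hypothetical lexicon standing in for a real sentiment model.
LEXICON = {"loved": 2.0, "informative": 1.0, "frustrated": -2.0}

def toy_sentiment_score(words):
    """Sum lexicon values; a positive total means positive sentiment."""
    return sum(LEXICON.get(w.lower().strip(".,!"), 0.0) for w in words)

def occlusion_importance(words, score_fn):
    """Importance of a word = how much the score drops when it is removed."""
    base = score_fn(words)
    importances = []
    for i in range(len(words)):
        occluded = words[:i] + words[i + 1:]  # drop one word at a time
        importances.append((words[i], base - score_fn(occluded)))
    return importances

sentence = "We loved the demo, it was very informative.".split()
importances = occlusion_importance(sentence, toy_sentiment_score)
top_word = max(importances, key=lambda pair: abs(pair[1]))[0]
print(top_word)
```

With a real model, `score_fn` would be the model's probability for the predicted class, and each occlusion costs one extra forward pass.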

Explaining with Surrogates: With this category of techniques, we build simpler, tree-based or linear models to explain individual predictions of more complex deep learning models. Linear models give us a very clear idea of which input features are important to a prediction because they essentially assign weights to different input features. The higher the weights, the more important the features.

This category of techniques will give you local explanations. Techniques in this category include LIME, SHAP, and SmoothGrad.
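The following sketch shows the surrogate idea in its simplest form. It is not LIME or SHAP: instead of sampling perturbations and weighting them by proximity, it evaluates a hypothetical black box (`toy_black_box`) on every keep/drop combination of the words and fits a linear model to the results. With such a balanced design, the least-squares weight of each word reduces to a difference of means, so no numerical library is needed.

```python
from itertools import product

# Hidden behaviour of the hypothetical black box; the surrogate never reads this.
_HIDDEN = {"great": 1.0, "slow": -0.5}

def toy_black_box(words):
    """Stand-in for a complex model: returns a sentiment score."""
    return sum(_HIDDEN.get(w, 0.0) for w in words)

def fit_linear_surrogate(words, predict_fn):
    """Fit score ~ bias + sum(weight_i * word_i_present) over all on/off masks.

    Enumerates 2**len(words) masks, so this only suits short inputs.
    """
    masks = list(product([0, 1], repeat=len(words)))
    outputs = [
        predict_fn([w for w, keep in zip(words, mask) if keep]) for mask in masks
    ]
    weights = []
    for i, word in enumerate(words):
        present = [y for m, y in zip(masks, outputs) if m[i] == 1]
        absent = [y for m, y in zip(masks, outputs) if m[i] == 0]
        # For a full on/off design this equals the least-squares coefficient.
        weights.append((word, sum(present) / len(present) - sum(absent) / len(absent)))
    return weights

words = ["the", "demo", "was", "great", "but", "slow"]
weights = fit_linear_surrogate(words, toy_black_box)
for word, weight in weights:
    print(f"{word}: {weight:+.2f}")
```

Because this toy black box is additive, the surrogate recovers its weights exactly. A real deep learning model is not additive, so the surrogate's weights are only an approximation of its behavior around one input.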

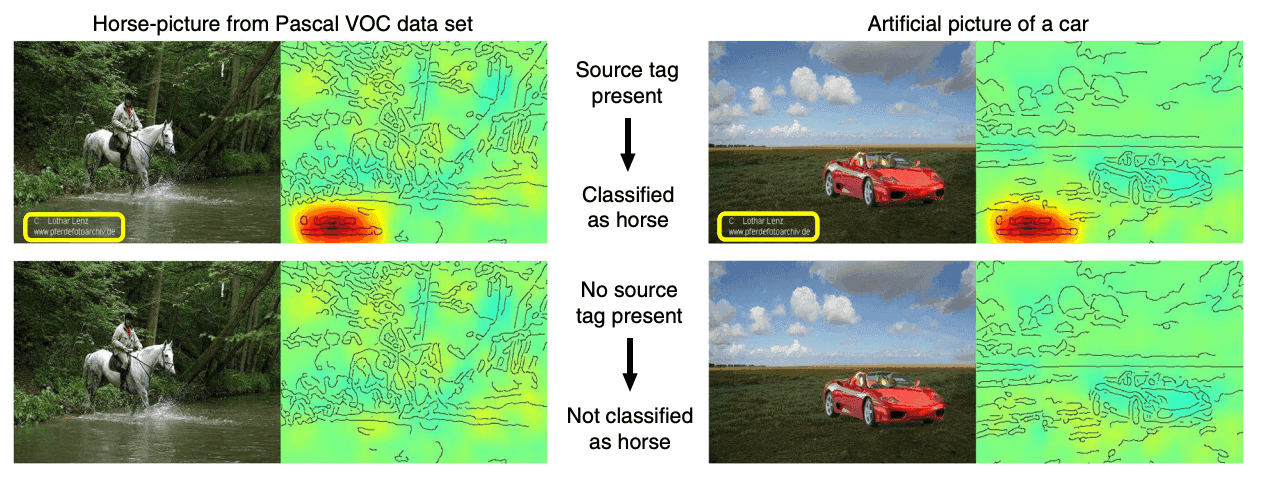

Propagation-Based Approaches (Leveraging Structure): Here, we delve deep into model structure and study the contribution of individual neurons to learn which neurons and layers affect the output the most. Using a technique called Layer-wise Relevance Propagation (LRP), it was discovered that a classification model trained on a popular dataset of images only learned how to recognize a horse because of a source tag present in about one-fifth of the horse images. Removing the tag also removes the ability to classify the picture as a horse. Furthermore, inserting the tag on a car image changes the classification from car to horse.

Explainable models can help model builders detect such faulty correlations and correct for them.

This category of techniques will give you global explanations. Techniques in this category include LRP, Deconvnet, and Guided backpropagation.

Meta-explanations: We use techniques to discover related or foundational tasks that the model must learn first in order to do its job. To do this, we plug into the intermediate layers of the model, layers that lie in between the input and the output, and ask them to perform various tasks of interest. For instance, authors of a study discovered that BERT’s intermediate layers encode a rich hierarchy of linguistic information, such as whether the nouns in a sentence are plural or singular, whether the tense of a verb is past, present or future, and whether the type of verb and the subject of a sentence match. They concluded that learning these skills seems to be a prerequisite to performing high-level natural language tasks such as text classification and producing coherent text.

This category of techniques will give you global explanations. Techniques in this category include SpRAy and Probing.

Making our sentiment model explainable

Step #1: Decide on the kind of explanation

As described in the section above, explanations can be global (structure-based) or local (prediction-based) in nature. We wanted to help users understand individual sentiment predictions so we chose to use local explainability techniques, ones that specialize in explaining individual predictions.

Step #2: Decide on the form of explanation

Next, we had to decide what form the explanation should take. Should it be visual, textual, tabular, graphical? What form of explanation would be both easy to understand, and likely to answer users’ questions of the output? We decided to show users which words were the most influential in compelling the model to label a sentence as positive or negative sentiment. We decided to highlight these words in the product. User studies indicated that this form of explanation was the most helpful.

In a sense, an aggregation of this sort of explanation provides users with a window into the worldview of the sentiment model—what kinds of negative emotion does the model find particularly noteworthy, how well does it understand figurative language like metaphors, what habitually confuses it.

Step #3: Select an explainability technique

The technique you choose will depend on the kind of deep learning model you’ve built. Some techniques are model agnostic, i.e., they work with every kind of model, while others are model specific. We tried out various techniques, conducted many human evaluations of the output, and selected one, in the local perturbations family of techniques, that gave us the most intuitive and informative explanations.

There were several design choices we had to make along the way. For instance, the technique we chose returned a weighted list of the words and phrases most important to the prediction made. Should we show the user all the important words, perhaps using a gradient of color to indicate which words were more and less important, or show them only a subset of words? In the end, we defined a threshold and decided to highlight only the most important words. We also compiled a list of formatting rules that cleaned up and completed explanations, for instance: highlight the full phrase if a majority of the words in the phrase are considered important.
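The threshold and the phrase-completion rule can be sketched in a few lines. Everything specific here is an assumption made for illustration: the importance values, the threshold of 0.3, the phrase boundaries, and the `select_highlights` helper are not the values or code used in the product.

```python
def select_highlights(weighted_words, phrases, threshold):
    """Pick the words to highlight.

    weighted_words: list of (word, importance) in sentence order.
    phrases: list of (start, end) index spans, end exclusive.
    """
    # Rule 1: keep only words whose importance clears the threshold.
    important = {
        i for i, (_, weight) in enumerate(weighted_words) if abs(weight) >= threshold
    }
    # Rule 2: if a majority of a phrase is important, highlight the whole phrase.
    for start, end in phrases:
        span = range(start, end)
        hits = sum(1 for i in span if i in important)
        if hits * 2 > len(span):
            important.update(span)
    return [weighted_words[i][0] for i in sorted(important)]

# Hypothetical importances for part of the example sentence used earlier.
weighted = [("I’m", 0.05), ("getting", 0.4), ("really", 0.1), ("frustrated", 0.9)]
phrases = [(1, 4)]  # "getting really frustrated"
highlights = select_highlights(weighted, phrases, threshold=0.3)
print(highlights)
```

Here "really" falls below the threshold on its own but is highlighted anyway, because two of the three words in its phrase are important.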

Any technique you use for individual predictions will have to be integrated into the model inference code and will execute after the model has made the prediction, because you are in fact explaining that prediction. There are a number of open-source packages you can adapt to explain your model.
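The ordering described above, predict first and then explain that prediction, might look like this in inference code. The functions `toy_predict`, `toy_explain`, and `infer`, and the word list, are hypothetical stand-ins; a real integration would call the deployed model and the chosen explainability package instead.

```python
NEGATIVE_WORDS = {"frustrated", "annoyed"}  # hypothetical toy vocabulary

def toy_predict(words):
    """Stand-in for the sentiment model."""
    return "negative" if any(w in NEGATIVE_WORDS for w in words) else "neutral"

def toy_explain(words, label):
    """Occlusion-style explanation: words whose removal changes the label."""
    return [
        w for i, w in enumerate(words)
        if toy_predict(words[:i] + words[i + 1:]) != label
    ]

def infer(words):
    label = toy_predict(words)              # step 1: make the prediction
    highlights = toy_explain(words, label)  # step 2: explain that prediction
    return {"label": label, "highlights": highlights}

result = infer("i am getting really frustrated".split())
print(result)
```

Keeping the explanation step separate from the prediction step also makes it easy to switch techniques later without touching the model call.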

Step #4: Optimize the technique

We had to optimize the chosen technique because the sentiment feature has to be fast and nimble enough to operate in a real-time environment, and we were now adding the task of explaining the prediction to the time already taken to make it. You could optimize by cutting out extraneous operations, using more efficient libraries and functions for intermediate tasks, or even altering the technique to return faster results.
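As an illustration of cutting out extraneous operations, the sketch below applies two generic tricks to an occlusion-style explainer: it skips the explanation entirely when the prediction is neutral, and it caches model calls so that identical perturbed inputs are scored only once. These are assumptions chosen for the example, not a description of the optimizations actually used; `LEX`, `cached_score`, and `explain_if_needed` are hypothetical names.

```python
from functools import lru_cache

LEX = {"frustrated": -2.0}  # hypothetical toy lexicon
CALLS = {"model": 0}        # counts how often the "model" actually runs

@lru_cache(maxsize=4096)
def cached_score(words):
    """Score a tuple of words; repeated inputs are served from the cache."""
    CALLS["model"] += 1
    return sum(LEX.get(w, 0.0) for w in words)

def explain_if_needed(words, min_abs_score=1.0):
    words = tuple(words)  # tuples are hashable, so they can be cache keys
    base = cached_score(words)
    if abs(base) < min_abs_score:
        return []  # neutral prediction: skip the perturbation loop
    return [
        w for i, w in enumerate(words)
        if base != cached_score(words[:i] + words[i + 1:])
    ]

skipped = explain_if_needed("see you next week".split())
highlights = explain_if_needed("really really frustrated".split())
print(skipped, highlights, CALLS["model"])
```

In the second sentence, removing either "really" yields the same perturbed input, so the cache saves one model call. The savings grow with repeated words and repeated sentences.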

Our most important learning?

It is crucial to keep your audience front and center and base decisions like the form and type of explanation on what that audience is likely to appreciate. An explanation rendered for the purposes of meeting regulatory standards is likely to be of a much different nature than an explanation rendered for the education of everyday users.

Our users have found that this feature upgrade helped them better understand and trust the sentiment analysis feature, and its usage and visibility in the product have increased.

Explainability helped us build a better model as well. Through various explainability techniques, particularly an aggregation of local explanations, we were able to identify and address:

The causes of false positives, times the model fired when it should not have.

Biases in the data that might cause unfavorable outcomes.
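Aggregating local explanations can be as simple as counting which highlighted words show up most often in the predictions you care about. The sketch below counts highlighted words across false positives; the data and the `aggregate_highlights` helper are invented for illustration.

```python
from collections import Counter

def aggregate_highlights(explanations):
    """explanations: list of (is_false_positive, highlighted_words) pairs."""
    counts = Counter()
    for is_false_positive, words in explanations:
        if is_false_positive:
            counts.update(w.lower() for w in words)
    return counts.most_common()

# Hypothetical reviewed predictions, for illustration only.
explanations = [
    (True, ["great", "thanks"]),
    (True, ["Great"]),
    (False, ["loved"]),
    (True, ["sure"]),
]
ranked = aggregate_highlights(explanations)
print(ranked[0])
```

Words that dominate the false positives are candidates for closer inspection, for example by checking how they are distributed in the training data.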

Conclusion

Understanding the inner workings of AI serves many purposes, each furthering the reach and effectiveness of AI. We must understand to build better and fairer models, to build trust and to build a digital future that is inclusive and committed to human flourishing.
