Language lives in society, and so must we. (Rickford & King, 2016)

When we build artificial intelligence (AI) solutions and machine learning (ML) models, usually the underlying goal is to make life easier for society in some way.

This is a wonderful goal, and it’s important to keep in mind that no member of our society should be excluded from using our products successfully. However, the industry is beginning to realize that if we aren’t careful when we sample our data, design our models, and test new features, we run the risk of overrepresenting speakers from certain regions, ethnic groups, genders, or income brackets. We want to be sure our products work well for all users: language lives in society, not just the Bay Area.

Dialpad’s ethics guidelines

Ethical AI, or rather the lack of it, has been very much in the spotlight recently. The industry has placed a heavy focus on large language models (LLMs) like ChatGPT, which, while extremely powerful, are also notoriously prone to producing not only misinformation but also racist, sexist, homophobic, and otherwise harmful output. So while these models allow us to make great improvements to our products, with that power comes the responsibility to ensure that those products are safe and helpful for our users. This responsibility begins at the very heart of LLMs: the data used to train or fine-tune them.

There are many ways in which your data, including the kind of language data we work with at Dialpad, can be appropriate or inappropriate for a task. Recently, the tech industry has been learning that one of these ways is sociolinguistic: language models are sometimes not very inclusive of different groups of speakers.

At Dialpad, we believe it isn’t enough for our artificial intelligence technology to serve a single demographic alone: we’re always working to make it inclusive to all.

In order to design more ethical, inclusive models from the get-go—and to improve on the models that we already have—we’ve created the following guidelines for incorporating ethics and inclusivity into our AI:

Fairness & Inclusiveness:

We recognize sociolinguistic differences, and work to incorporate as many as we are able into our ML models.

User-focused Benefit:

Our technology is designed to facilitate human performance, not replace it; we don’t use our tech or your information to exploit you or benefit third parties.

Accuracy & Objectivity:

Our team includes trained linguists and skilled, diverse annotators who ensure that input and output data are as accurate and objective as possible. Our internal versioning tools allow for easy updates as our data are continuously improved and augmented.

Security & Safety:

We know our data are extremely valuable and vulnerable; we believe that ethical use of this data requires effective and constantly evolving security measures.

Privacy & Control:

We also value our users’ individual privacy: you control your own data, and what data Dialpad has access to.

Explainability:

While machine learning models can be somewhat opaque, we make use of the latest advances in tech to ensure that our models are transparent—we know why and how they make the decisions that they do.

Accountability:

We take care to know our data and models well enough to understand where they’re lacking, and we hold ourselves accountable for improving them regularly.

These are principles we strongly believe in, and we’d like to help educate others on how we put these kinds of guidelines into practice in our tech.

In this introductory post for the Ethics at Dialpad series, we’ll take a deep dive into the first principle: Fairness & Inclusiveness.

Ethics deep dive: Fairness & Inclusiveness

Punctuating sentences

Let’s consider a model design that, to a layperson, seems fairly basic at first glance: adding punctuation to call transcripts. This task would be extremely tedious for a human, so it makes sense to automate it with machine learning.

In this example, to train a machine learning model to punctuate sentences, you need to feed it language data. Training data should be correctly punctuated already, and you will need examples of both long and short sentences.

You will also need sufficient data coverage of every punctuation mark you want the model to “learn”, at similar rates to what you’ll see in your test data; if you train the model on sentences with only periods and commas, it will never correctly punctuate questions. If you train the model on only short sentences, you’ll likely get results that are under-punctuated—your model never “learned” how to break up long sentences using commas.
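To make the coverage requirement above concrete, the Python sketch below compares how often each punctuation mark appears in a training sample versus a sample of the text the model will actually punctuate. It is illustrative only: the mark set, the `tolerance` threshold, and the function names are assumptions rather than Dialpad’s tooling, and a real check would also look at sentence-length distributions.

```python
from collections import Counter

# Assumption: the set of marks the punctuator should learn.
PUNCTUATION = {".", ",", "?", "!"}


def punctuation_rates(sentences):
    """Return each mark's share of all punctuation marks found in `sentences`."""
    counts = Counter(ch for s in sentences for ch in s if ch in PUNCTUATION)
    total = sum(counts.values())
    return {mark: (counts[mark] / total if total else 0.0) for mark in sorted(PUNCTUATION)}


def coverage_gaps(train, target, tolerance=0.5):
    """List marks that are under-represented in `train` relative to `target`.

    A mark is flagged when its training rate is below `tolerance` times its
    rate in the target (e.g. transcript) sample. `tolerance` is an arbitrary,
    illustrative threshold.
    """
    train_rates = punctuation_rates(train)
    target_rates = punctuation_rates(target)
    return [
        mark
        for mark in sorted(PUNCTUATION)
        if target_rates[mark] > 0 and train_rates[mark] < tolerance * target_rates[mark]
    ]


# Made-up example sentences, for illustration only.
train = ["The report is attached.", "We met on Monday, then again on Friday."]
target = ["Can you hear me?", "Yes, I can.", "Great, thanks!"]
print(coverage_gaps(train, target))  # ['!', '?']
```

Here the training sample contains only periods and commas, so question marks and exclamation marks are flagged, which is exactly the situation in which a model never learns to punctuate questions.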

But there are sociolinguistic factors to consider as well. Language lives in society, so there’s more at stake than your English essay: language has a real impact on how people interact with not only products, but also each other.

To account for these factors, you must consider your training data. For example, a common source of training data for language-based ML models is the wealth of text available on Wikipedia. But if you train your punctuator on Wikipedia alone, you’ve given it these traits: written text, in a formal register of Standard American English, composed mostly of declarative sentences, the authors of which are mostly white men. (Further reading here, here, and here.)

If this is the only data you use to train your model, it probably won’t perform as well on texts with the following traits:

non-American varieties of English,

non-standard American varieties of English,

questions,

informal language,

spoken language (like phone calls),

conversational language, and

language associated with being non-white and/or non-male.

Our own work on punctuation highlighted a mismatch between the training data we were using and the data we had in our transcripts, which resulted in low accuracy for predicting where to insert question marks. Rather than continue to work with inappropriate data to the detriment of many of our customers, we instead developed methods to select relevant data from transcripts, and added more appropriate data from other sources, including more human-transcribed data.

The result is that our punctuator model performs incredibly well on our actual data from our actual customers—rather than performing well only on, for example, the language of Wikipedia. (You can learn more about the type of data we used for our punctuator here.)
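A mismatch like the low question-mark accuracy described above is easy to miss when accuracy is reported as a single number, and easier to see when it is broken down per punctuation mark. The following minimal sketch assumes gold and predicted transcripts are already aligned word by word, with each word labeled by the mark that follows it (a common framing for punctuation restoration, not necessarily Dialpad’s); `per_label_recall` is a hypothetical helper.

```python
from collections import defaultdict


def per_label_recall(gold, predicted):
    """Recall for each punctuation mark over aligned per-word label sequences.

    Each label is the mark that follows a word; "" means no punctuation.
    """
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must be aligned word by word")
    hits = defaultdict(int)
    totals = defaultdict(int)
    for g, p in zip(gold, predicted):
        if g:  # only score positions where the reference has punctuation
            totals[g] += 1
            if g == p:
                hits[g] += 1
    return {label: hits[label] / totals[label] for label in sorted(totals)}


# Hypothetical labels for the words: so can you hear me okay yes I can
gold = ["", "", "", "", "", "?", ",", "", "."]
predicted = ["", "", "", "", "", ".", ",", "", "."]
print(per_label_recall(gold, predicted))  # {',': 1.0, '.': 1.0, '?': 0.0}
```

A model trained mostly on declarative text can look accurate overall while scoring near zero on question marks; per-mark recall (and, in practice, precision as well) makes that gap visible.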

Sentiment analysis

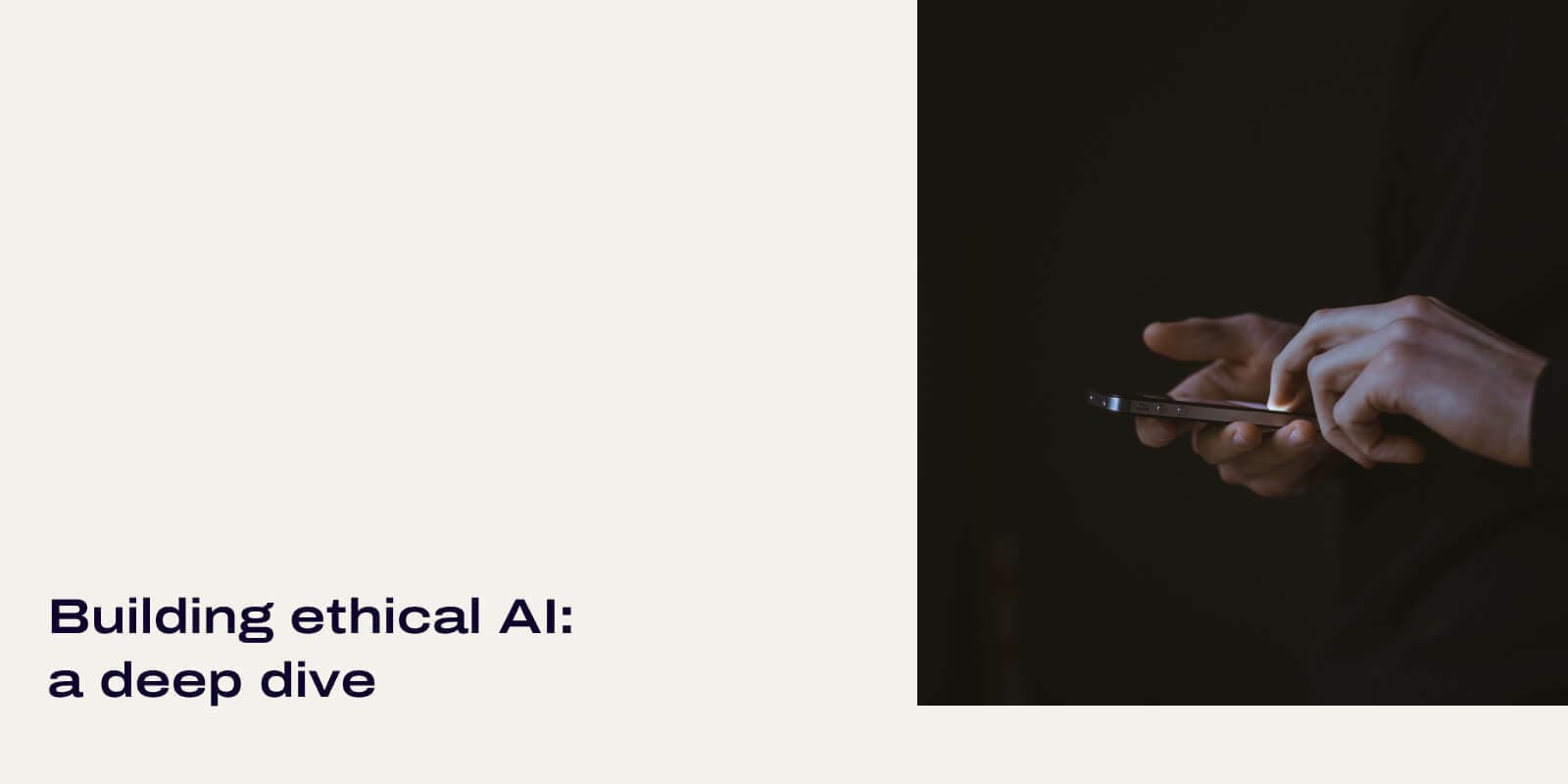

Another case study in implementing fairness and inclusiveness is sentiment analysis: in order to train a machine learning model to recognize positive and/or negative sentiment in textual data, you will need to provide appropriate training data, in terms of both social and linguistic contexts.

This is the same concept we used in the punctuator example above, but the details look different. Instead of sentence length and punctuation types, you will need to look at the meanings of your sentences to ensure that you have examples of positive, negative, and neutral sentiment. (Neutral examples matter because not everything we say carries a positive or negative feeling.)

For example:

Now for the sociolinguistic context: what language are you working with? Have you considered the culture in which your sentiment labels will exist? Have you considered regional linguistic variation? These questions are relevant to both the automatic speech recognition (ASR) and natural language processing (NLP) aspects of Dialpad Ai.

For example, if your ASR model is trained on US English alone, you may see transcription errors when you process other English varieties. In this example, some key differences to consider between US and Australian English are the pronunciation of r in certain linguistic contexts, and vowel pronunciation differences in words like mate.

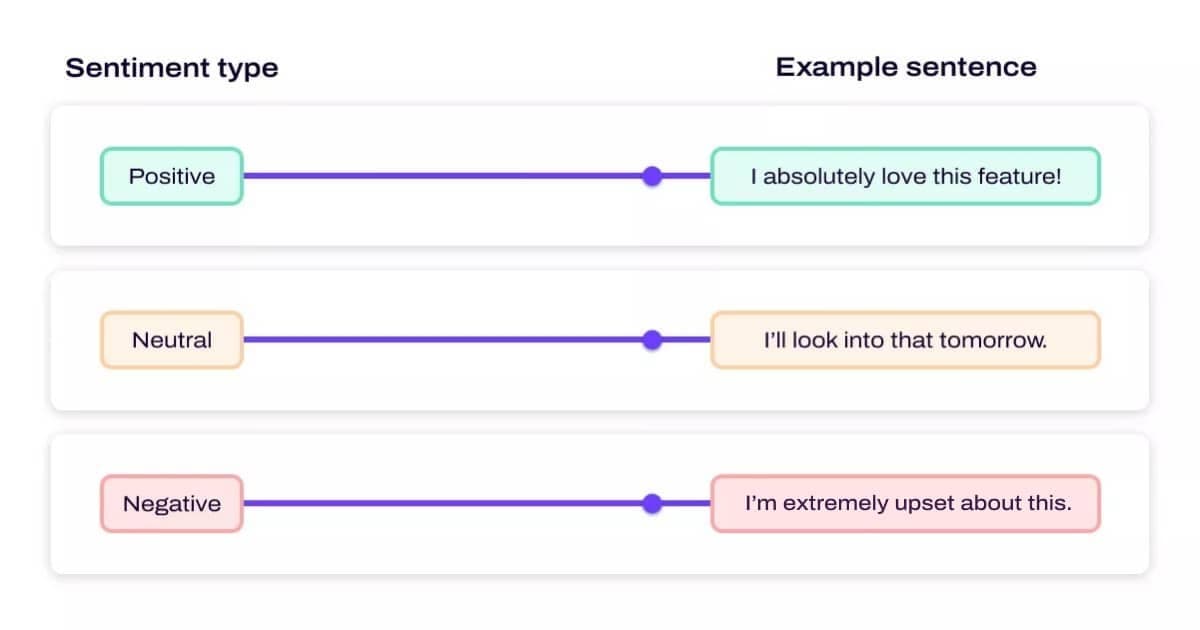

Here are just a few examples of words that anyone might say during a call. When transcribed by an ASR model trained only on North American English varieties, these words might have sentiment-al consequences for Australian English speakers:

From the NLP side, you should consider whether linguistic cues to negative sentiment are even the same across cultures: for example, does swearing convey the same sentiment in US English as it does in Australian English? Spoiler: it doesn’t, and you’ll have to account for that.

Similarly, we noticed an effect on positive sentiment: Australian English and US English differ in their politeness strategies, which affects positive sentiment indicators such as thank you and have a great day.

To address these sociolinguistic differences when we began expanding our coverage to Australian English, we improved both our ASR and NLP sentiment models by retraining them with additional Australian English data. The result is improved accuracy and performance of our AI on Australian calls.
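One way to check whether changes like these actually help a given group of speakers is to measure transcription quality separately for each English variety instead of pooling all calls together. The sketch below computes word error rate (WER), the word-level edit distance divided by the number of reference words, per variety tag. The tags, sample format, and transcripts are invented for illustration; this is not Dialpad’s evaluation code.

```python
from collections import defaultdict


def word_errors(reference, hypothesis):
    """Word-level edit distance: substitutions + deletions + insertions."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of ref_word
                curr[j - 1] + 1,     # insertion of hyp_word
                prev[j - 1] + cost,  # substitution, or a match when cost == 0
            )
        prev = curr
    return prev[-1]


def wer_by_variety(samples):
    """Corpus-level WER per variety from (variety, reference, hypothesis) triples."""
    errors = defaultdict(int)
    words = defaultdict(int)
    for variety, reference, hypothesis in samples:
        errors[variety] += word_errors(reference, hypothesis)
        words[variety] += len(reference.split())
    return {v: errors[v] / words[v] for v in sorted(words) if words[v]}


# Invented transcripts; the variety tags are assumptions for this sketch.
samples = [
    ("en-AU", "no worries mate", "no worries might"),
    ("en-AU", "have a good one", "have a good one"),
    ("en-US", "thanks so much", "thanks so much"),
]
print(wer_by_variety(samples))  # en-AU: 1 error over 7 reference words; en-US: 0 errors
```

Comparing these per-variety numbers before and after retraining gives a direct read on whether the added data helped the speakers it was meant to help.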

Tips and tricks

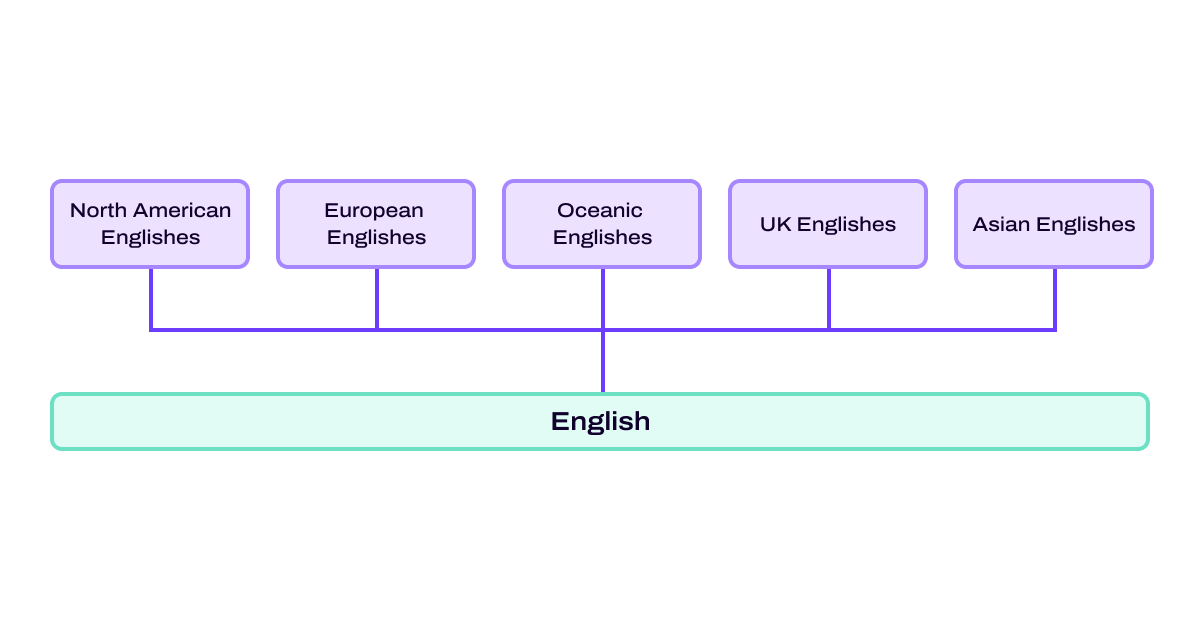

The following is a chart of some of the most widely spoken English varieties, or dialects:

And these are only a few of the many Englishes, which already makes for a lot of dialects to consider! While you may not have access to training data for every single English variety, a good first step is to make sure you have appropriate data from the language varieties of the people you want your product(s) to serve. For example, if you have no customers in the North American market but many in Australia, you will want to focus your efforts on getting good coverage across all varieties of Australian English, and across as many different Australian demographics as possible.
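The prioritization described above can be approximated by comparing each variety’s share of your training data with its share of your customer base. This is a hypothetical sketch: it assumes you can tag training audio and customers by variety, and the hour counts and shares in the example are made up.

```python
def coverage_shortfall(training_hours, customer_share):
    """Varieties whose share of training data is below their share of customers.

    training_hours: hours of transcribed training audio per variety (hypothetical).
    customer_share: fraction of customers per variety, summing to about 1.
    Returns {variety: gap}, largest gap first.
    """
    total_hours = sum(training_hours.values())
    gaps = {}
    for variety, share in customer_share.items():
        data_share = training_hours.get(variety, 0) / total_hours if total_hours else 0.0
        if data_share < share:
            gaps[variety] = share - data_share
    # Largest gaps first, so they can be prioritized for data collection.
    return dict(sorted(gaps.items(), key=lambda item: item[1], reverse=True))


# Illustrative numbers only.
hours = {"en-US": 900, "en-AU": 100}
customers = {"en-US": 0.3, "en-AU": 0.6, "en-NZ": 0.1}
print(list(coverage_shortfall(hours, customers)))  # ['en-AU', 'en-NZ']
```

Ranking by the raw gap is one simple choice; you might instead weight gaps by call volume or by measured error rates for each variety.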

If you’re not sure whether your model has good demographic coverage, just ask! At Dialpad, we are always collecting feedback from our customers regarding our transcript quality and Vi features, which tells us how to improve. For example, we received feedback from customers in Louisiana about issues of transcript quality impeding their use of our products. On receiving that feedback, we augmented our data to better represent this demographic, retrained our models, and the transcript quality for these customers was vastly improved.

Being mindful of inclusiveness isn’t always as large-scale as retraining your whole model, either: there are many ways you can adjust your algorithms for simple solutions. Something as small as allowing dates to be formatted in dd/mm/yyyy order—which is much more common in Australia than the typically American mm/dd/yyyy format—can go a long way towards increasing inclusiveness and improving the customer experience.
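As a small illustration of the date example, the sketch below picks a numeric date order by region using Python’s standard `datetime.date.strftime`. The `DATE_FORMATS` table and `format_date` helper are hypothetical; in practice, locale data such as the Unicode CLDR (available in Python through libraries like Babel) is a more complete source than a hand-maintained mapping.

```python
from datetime import date

# Hypothetical region-to-format table; real systems would usually rely on
# locale data (e.g. Unicode CLDR) instead of a hand-maintained mapping.
DATE_FORMATS = {
    "US": "%m/%d/%Y",  # mm/dd/yyyy
    "AU": "%d/%m/%Y",  # dd/mm/yyyy
}


def format_date(d, region, default="%Y-%m-%d"):
    """Format `d` in the region's preferred order, falling back to ISO 8601."""
    return d.strftime(DATE_FORMATS.get(region, default))


meeting = date(2023, 3, 14)
print(format_date(meeting, "AU"))  # 14/03/2023
print(format_date(meeting, "US"))  # 03/14/2023
```

Falling back to ISO 8601 (yyyy-mm-dd) for regions without an entry avoids silently guessing between the two ambiguous numeric orders.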

Moving forward

These are just a few examples of what to consider when designing your AI. For ease of explanation, we have focused only on English here, but the same concepts and principles apply to AI for other languages, even if the specific implementation differs.

While integrating ethical principles into your tech can seem daunting at times, these examples should give you some hope that it is not only possible, but entirely doable, to create ethical tech. Products should work for the people of the society they were made to serve; our goal at Dialpad is to make sure that our products continue to do so effectively, fairly, and inclusively.

Stay tuned for our next instalment of the Ethics at Dialpad series, which will focus on the principle of Explainability.

See how Dialpad Ai works

Book a demo to see how Dialpad Ai can provide real-time insights and assistance for agents, or take a self-guided interactive tour of the app on your own first!